Information and download from http://code.google.com/p/multi-mechanize/

UPDATE 14th March 2012: now moved to http://testutils.org/multi-mechanize

Multi-Mechanize is an open source framework for API performance and load testing. It allows you to run simultaneous python scripts to generate load (synthetic transactions) against a web site or API/service.

In your scripts, you have the convenience of mechanize along with the power of the full Python programming language at your disposal. You programmatically create test scripts to simulate virtual user activity. Your scripts will then generate HTTP requests to intelligently navigate a web site or send requests to a web service.

…

You should be proficient with Python, HTTP, and performance/load testing to use multi-mechanize successfully.

Installing Multi-Mechanize

First time around I got this horribly wrong, probably through not being proficient with… Actually, my problem was installing Python 3.2 for Windows 64-bit. It turns out Multi-Mechanize only works with Python 2.6 and 2.7, and if you install the wrong version you get loads of syntax error messages, so I had to start again. Here is the correct sequence:

- First install Python 2.7 from http://www.python.org/download/releases/2.7.2/ (specifically the Windows X86-64 MSI Installer (2.7.2))

- To install mechanize, download distribute_setup.py from http://pypi.python.org/pypi/distribute#distribute-setup-py and run

  C:\Python27\python C:\blah\Downloads\distribute_setup.py

  then

  C:\Python27\scripts\easy_install mechanize

- Install Numpy from http://www.lfd.uci.edu/~gohlke/pythonlibs/#numpy (specifically numpy-MKL-1.6.1.win-amd64-py2.7.exe [8.7 MB] [Python 2.7] [64 bit] [Oct 29, 2011])

- Then install Matplotlib from http://www.lfd.uci.edu/~gohlke/pythonlibs/#matplotlib (specifically matplotlib-1.1.0.win-amd64-py2.7.exe [4.4 MB] [Python 2.7] [64 bit] [Oct 06, 2011])

- Finally download Multi-Mechanize from http://code.google.com/p/multi-mechanize/downloads/list and copy the unzipped folder somewhere (no installation).
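Given how easy it is to end up with the wrong Python version, a quick sanity check of the environment can save a restart. The following is only a sketch: the function name `check_environment` and its parameters are made up for illustration, and the list of modules is simply the set installed in the steps above.

```python
import sys

# Modules installed in the steps above.
REQUIRED_MODULES = ("mechanize", "numpy", "matplotlib")


def check_environment(version_info=None, importer=__import__):
    """Return a list of problems found; an empty list means all looks fine.

    version_info and importer are parameters only so the check can be
    exercised without touching the real interpreter (hypothetical design).
    """
    if version_info is None:
        version_info = sys.version_info
    problems = []
    # The post found that only Python 2.6 and 2.7 work.
    if tuple(version_info[:2]) not in ((2, 6), (2, 7)):
        problems.append(
            "Python %d.%d found; Multi-Mechanize needs 2.6 or 2.7"
            % (version_info[0], version_info[1])
        )
    for name in REQUIRED_MODULES:
        try:
            importer(name)
        except ImportError:
            problems.append("missing module: %s" % name)
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print(problem)
```

Run it with the interpreter you intend to use for Multi-Mechanize, e.g. C:\Python27\python; no output means nothing obvious is missing.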

Using Multi-Mechanize

It’s pretty simple. Use a command like this to start a session:

>c:\python27\python multi-mechanize.py testMySite

This refers to a “project” (in this case “testMySite”) which is a folder under \projects under your Multi-Mechanize folder. To create a project I just copied and adapted the “default_project” that came in the download.

In the project folder there is a config.cfg text file that specifies some global settings, which scripts to run, and how many simultaneous sessions of each to run. There is some sparse information on the config settings here: http://code.google.com/p/multi-mechanize/wiki/ConfigFile

You can specify any number of “user groups”, each representing one sequence of actions on your web site/service (e.g. loading the home page, or executing a search). Each user group is associated with a python script defining the actions. Each user group has a number of threads, i.e. virtual users attempting the sequence of operations.

TODO: insert some information about the actual Python script - how to perform simple and more complex operations on a web site.
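As a starting point, here is a minimal sketch of what a test script for the "load the home page and check for some text" case might look like. It assumes the Multi-Mechanize convention of a `Transaction` class with a `run()` method and a `custom_timers` dictionary; check the project documentation for the exact contract. The URL, the expected text and the optional `fetch` parameter are hypothetical, and the sketch uses the standard library's urllib rather than mechanize so that it has no dependencies (a `mechanize.Browser()` could be swapped in for `_http_get`).

```python
import time

try:
    from urllib.request import urlopen  # Python 3
except ImportError:
    from urllib2 import urlopen  # Python 2.6 / 2.7


class Transaction(object):
    def __init__(self, fetch=None):
        # Timings recorded here are picked up for the results report.
        self.custom_timers = {}
        self.url = "http://www.example.com/"   # hypothetical target
        self.expected_text = "Example Domain"  # hypothetical page text
        # fetch is injectable (hypothetical) so the script can be tried
        # out without hitting a real site.
        self._fetch = fetch or self._http_get

    def _http_get(self, url):
        """Fetch url and return (status_code, body_text)."""
        response = urlopen(url)
        try:
            return response.getcode(), response.read().decode("utf-8", "replace")
        finally:
            response.close()

    def run(self):
        """One virtual-user transaction: load the page, time it, verify it."""
        start = time.time()
        status, body = self._fetch(self.url)
        self.custom_timers["Load_Homepage"] = time.time() - start
        # A failed assertion marks the transaction as an error.
        assert status == 200, "Bad HTTP response: %s" % status
        assert self.expected_text in body, "Expected text not found"


if __name__ == "__main__":
    # Dry run with a canned response instead of a real HTTP request.
    trans = Transaction(fetch=lambda url: (200, "<h1>Example Domain</h1>"))
    trans.run()
    print(trans.custom_timers)
```

More complex operations (logging in, submitting forms, following links) would go inside `run()`, each wrapped in its own timer if you want it reported separately.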

The global settings include how long to run the test for, the time series interval for results analysis, and how long to “rampup” the number of users, presumably from one up to the maximum number of threads specified in the user groups. The ramp-up is to see within one test how the site performs under low and then progressively higher loads.
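To make the ramp-up idea concrete, here is a small sketch of how the number of active virtual users would grow if the threads in each user group are started at evenly spaced intervals across the ramp-up period. That even spacing is an assumption (it matches the "presumably" above, not a confirmed reading of the Multi-Mechanize source), and the function names are made up for illustration.

```python
def thread_start_times(threads, rampup_secs):
    """Start offset in seconds for each thread in one user group,
    assuming the threads are spaced evenly across the ramp-up period."""
    spacing = float(rampup_secs) / threads
    return [i * spacing for i in range(threads)]


def active_users(elapsed_secs, threads, rampup_secs, groups=1):
    """Virtual users started by elapsed_secs, across identical user groups."""
    starts = thread_start_times(threads, rampup_secs)
    return groups * sum(1 for start in starts if start <= elapsed_secs)


if __name__ == "__main__":
    # Example: two groups of 50 threads, ramping up over 300 seconds.
    for elapsed in (0, 60, 150, 300):
        print(elapsed, active_users(elapsed, threads=50, rampup_secs=300, groups=2))
```

Under this assumption a group of 50 threads with a 300-second ramp-up starts a new virtual user every 6 seconds, so the later time-series intervals in the report reflect a much heavier load than the first ones.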

The results of the test are output into a folder below the project, with a time-stamped name like \results\results_2011.12.27_16.32.07

This contains the raw results as CSV, plus some graphs as PNG images, and an HTML page that brings the numbers and images together into a report.

Example tests

I ran simple tests on the following sites:

- www.esdm.co.uk (main exeGesIS web site built in DotNetNuke and running on one of our older Telehouse servers)

- www.esdm.no-ip.co.uk (draft replacement exeGesIS web site built in mojoPortal and running on our newer Telehouse hosting stack)

- www.breconfans.org.uk (a site I run at home, using mojoPortal on Arvixe hosting)

- www.wikipedia.org (a high availability global site as a control)

The test script simply loaded the home page and checked for some text on the page. I tested with two user groups undertaking the same actions, with 50 virtual users in each, ramping up throughout the 5 minute test period.
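The setup just described would correspond to a config.cfg roughly like the one embedded in the sketch below. The key names (`run_time`, `rampup`, `results_ts_interval`, `threads`, `script`) are given as recalled from the ConfigFile wiki page and should be checked there; the script file name is hypothetical. The Python around it is just a standalone helper (Python 3 standard library, not part of Multi-Mechanize) that parses the text and confirms the totals.

```python
import configparser  # Python 3 standard library

# Assumed config.cfg for the tests in this post: a 5 minute run, ramping up
# for the whole period, 30 second reporting intervals, two groups of 50 users.
CONFIG_TEXT = """
[global]
run_time: 300
rampup: 300
results_ts_interval: 30

[user_group-1]
threads: 50
script: load_homepage.py

[user_group-2]
threads: 50
script: load_homepage.py
"""


def summarise(config_text):
    """Return run time, number of reporting intervals and total virtual users."""
    parser = configparser.ConfigParser()
    parser.read_string(config_text)
    groups = [name for name in parser.sections() if name.startswith("user_group")]
    run_time = parser.getint("global", "run_time")
    interval = parser.getint("global", "results_ts_interval")
    return {
        "run_time": run_time,
        "intervals": run_time // interval,
        "virtual_users": sum(parser.getint(g, "threads") for g in groups),
    }


if __name__ == "__main__":
    print(summarise(CONFIG_TEXT))
```

The 10 intervals this gives line up with the 10 rows in each "Interval Details" table below.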

www.esdm.co.uk

Timer Summary (secs)

| count | min | avg | 80pct | 90pct | 95pct | max | stdev |
|---|---|---|---|---|---|---|---|
| 1088 | 0.480 | 12.897 | 19.550 | 23.866 | 28.932 | 88.588 | 9.897 |

Interval Details (secs)

| interval | count | rate | min | avg | 80pct | 90pct | 95pct | max | stdev |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 105 | 3.50 | 0.480 | 1.613 | 2.120 | 2.290 | 2.630 | 4.190 | 0.711 |
| 2 | 82 | 2.73 | 1.992 | 4.856 | 6.574 | 8.324 | 9.834 | 11.624 | 2.251 |
| 3 | 101 | 3.37 | 3.000 | 6.962 | 9.360 | 11.960 | 13.194 | 14.610 | 3.095 |
| 4 | 110 | 3.67 | 2.830 | 9.231 | 12.650 | 17.330 | 18.070 | 30.890 | 4.900 |
| 5 | 89 | 2.97 | 4.964 | 13.425 | 17.822 | 20.912 | 22.222 | 28.932 | 5.095 |
| 6 | 114 | 3.80 | 4.912 | 14.126 | 18.792 | 22.286 | 24.210 | 48.924 | 7.163 |
| 7 | 117 | 3.90 | 4.670 | 14.342 | 18.290 | 20.986 | 23.958 | 58.470 | 6.999 |
| 8 | 92 | 3.07 | 5.402 | 19.430 | 24.054 | 28.328 | 31.194 | 51.860 | 7.733 |
| 9 | 163 | 5.43 | 2.650 | 17.628 | 25.356 | 31.604 | 34.338 | 88.588 | 12.654 |
| 10 | 115 | 3.83 | 6.500 | 22.619 | 27.201 | 33.947 | 39.892 | 86.551 | 11.353 |

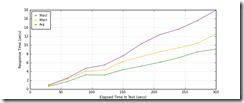

Graphs

Response Time: 30 sec time-series

![Load_Homepage_response_times_intervals[6] Load_Homepage_response_times_intervals[6]](/Data/Sites/1/media/wlw/load_homepage_response_times_intervals%5B6%5D_thumb.png)

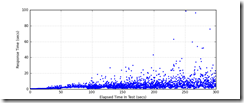

Response Time: raw data (all points)

![Load_Homepage_response_times[6] Load_Homepage_response_times[6]](/Data/Sites/1/media/wlw/load_homepage_response_times%5B6%5D_thumb.png)

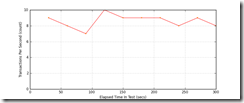

Throughput: 30 sec time-series

![Load_Homepage_throughput[6] Load_Homepage_throughput[6]](/Data/Sites/1/media/wlw/load_homepage_throughput%5B6%5D_thumb.png)

www.esdm.no-ip.co.uk

Timer Summary (secs)

| count | min | avg | 80pct | 90pct | 95pct | max | stdev |
|---|---|---|---|---|---|---|---|
| 5105 | 0.210 | 2.914 | 4.410 | 5.954 | 7.190 | 27.443 | 2.285 |

Interval Details (secs)

| interval | count | rate | min | avg | 80pct | 90pct | 95pct | max | stdev |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 518 | 17.27 | 0.210 | 0.347 | 0.410 | 0.470 | 0.520 | 1.210 | 0.119 |
| 2 | 599 | 19.97 | 0.300 | 0.790 | 1.110 | 1.250 | 1.320 | 2.380 | 0.322 |
| 3 | 473 | 15.77 | 0.960 | 1.596 | 1.920 | 2.030 | 2.332 | 3.550 | 0.399 |
| 4 | 528 | 17.60 | 1.020 | 2.068 | 2.410 | 2.590 | 2.742 | 5.602 | 0.505 |
| 5 | 504 | 16.80 | 1.250 | 2.586 | 3.020 | 3.360 | 3.550 | 6.170 | 0.713 |
| 6 | 461 | 15.37 | 2.314 | 3.532 | 4.204 | 5.482 | 6.124 | 9.872 | 1.253 |
| 7 | 406 | 13.53 | 2.670 | 4.808 | 6.440 | 7.470 | 8.340 | 17.990 | 2.107 |
| 8 | 556 | 18.53 | 2.080 | 4.031 | 5.340 | 6.560 | 8.332 | 18.562 | 2.157 |
| 9 | 506 | 16.87 | 1.960 | 5.030 | 6.570 | 8.010 | 9.734 | 25.216 | 2.685 |
| 10 | 554 | 18.47 | 2.050 | 4.880 | 6.540 | 8.082 | 8.882 | 27.443 | 2.432 |

Graphs

Response Time: 30 sec time-series

![Load_Homepage_response_times_intervals[4] Load_Homepage_response_times_intervals[4]](/Data/Sites/1/media/wlw/load_homepage_response_times_intervals%5B4%5D_thumb.png)

Response Time: raw data (all points)

![Load_Homepage_response_times[4] Load_Homepage_response_times[4]](/Data/Sites/1/media/wlw/load_homepage_response_times%5B4%5D_thumb.png)

Throughput: 30 sec time-series

![Load_Homepage_throughput[4] Load_Homepage_throughput[4]](/Data/Sites/1/media/wlw/load_homepage_throughput%5B4%5D_thumb.png)

www.breconfans.org.uk

Timer Summary (secs)

| count | min | avg | 80pct | 90pct | 95pct | max | stdev |
|---|---|---|---|---|---|---|---|
| 6710 | 0.780 | 2.218 | 2.970 | 4.620 | 5.590 | 16.120 | 1.655 |

Interval Details (secs)

| interval | count | rate | min | avg | 80pct | 90pct | 95pct | max | stdev |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 220 | 7.33 | 0.780 | 0.830 | 0.850 | 0.880 | 0.890 | 0.990 | 0.031 |
| 2 | 500 | 16.67 | 0.790 | 0.959 | 1.020 | 1.144 | 1.420 | 2.180 | 0.216 |
| 3 | 703 | 23.43 | 0.874 | 1.111 | 1.200 | 1.280 | 1.400 | 1.720 | 0.153 |
| 4 | 761 | 25.37 | 0.980 | 1.416 | 1.620 | 2.060 | 2.120 | 2.490 | 0.356 |
| 5 | 857 | 28.57 | 1.080 | 1.611 | 1.810 | 2.072 | 2.430 | 6.206 | 0.556 |
| 6 | 982 | 32.73 | 0.940 | 1.699 | 1.720 | 2.730 | 3.240 | 10.488 | 0.778 |
| 7 | 628 | 20.93 | 1.250 | 2.986 | 4.340 | 5.030 | 5.600 | 11.762 | 1.473 |
| 8 | 693 | 23.10 | 1.450 | 3.333 | 4.870 | 5.680 | 7.000 | 15.868 | 1.905 |
| 9 | 663 | 22.10 | 1.680 | 3.794 | 5.150 | 6.300 | 7.600 | 14.934 | 1.986 |
| 10 | 703 | 23.43 | 1.660 | 3.714 | 5.390 | 6.910 | 7.900 | 16.120 | 2.171 |

Graphs

Response Time: 30 sec time-series

![Load_Homepage_response_times_intervals[8] Load_Homepage_response_times_intervals[8]](/Data/Sites/1/media/wlw/load_homepage_response_times_intervals%5B8%5D_thumb.png)

Response Time: raw data (all points)

![Load_Homepage_response_times[8] Load_Homepage_response_times[8]](/Data/Sites/1/media/wlw/load_homepage_response_times%5B8%5D_thumb.png)

Throughput: 30 sec time-series

![Load_Homepage_throughput[8] Load_Homepage_throughput[8]](/Data/Sites/1/media/wlw/load_homepage_throughput%5B8%5D_thumb.png)

www.wikipedia.org

Timer Summary (secs)

| count | min | avg | 80pct | 90pct | 95pct | max | stdev |
|---|---|---|---|---|---|---|---|
| 2748 | 0.340 | 4.880 | 6.811 | 9.970 | 14.132 | 98.597 | 5.963 |

Interval Details (secs)

| interval | count | rate | min | avg | 80pct | 90pct | 95pct | max | stdev |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 278 | 9.27 | 0.340 | 0.643 | 0.780 | 0.930 | 0.970 | 2.990 | 0.264 |
| 2 | 269 | 8.97 | 0.570 | 1.689 | 2.240 | 2.484 | 3.030 | 5.304 | 0.796 |
| 3 | 239 | 7.97 | 1.944 | 3.265 | 4.040 | 4.716 | 5.542 | 7.652 | 1.096 |
| 4 | 303 | 10.10 | 0.750 | 3.182 | 4.310 | 5.482 | 7.074 | 18.540 | 2.366 |
| 5 | 293 | 9.77 | 0.880 | 4.424 | 6.282 | 7.574 | 9.214 | 26.812 | 3.156 |
| 6 | 297 | 9.90 | 0.960 | 5.225 | 7.410 | 10.330 | 14.050 | 29.854 | 4.526 |
| 7 | 290 | 9.67 | 0.830 | 6.111 | 8.510 | 12.400 | 15.470 | 43.068 | 5.177 |
| 8 | 252 | 8.40 | 0.930 | 7.125 | 9.380 | 13.642 | 21.808 | 62.750 | 6.843 |
| 9 | 278 | 9.27 | 0.870 | 8.470 | 10.430 | 15.573 | 23.071 | 98.597 | 10.443 |
| 10 | 249 | 8.30 | 1.170 | 9.083 | 12.550 | 17.946 | 23.132 | 75.871 | 8.477 |

Graphs

Response Time: 30 sec time-series

Response Time: raw data (all points)

Throughput: 30 sec time-series

Analysis

I am no expert in load testing, so I do not pretend to be able to analyse the results in any meaningful way. Looking to learn though…!

But bringing together the most significant statistics from these tests…

| Measurement | esdm.co.uk | esdm.no-ip.co.uk | breconfans.org.uk | www.wikipedia.org |
|---|---|---|---|---|
| CMS | DNN | mojoPortal | mojoPortal | n/a |
| Platform | exeGesIS Telehouse hosting. Windows Server 2003 + SQL Server 2000 | exeGesIS Telehouse hosting. Windows Server 2008 + SQL Server 2008 R2 | Arvixe hosting. Windows Server 2008 + SQL Server 2008 Express | n/a |
| Successful requests | 1088 | 5105 | 6710 | 2748 |
| Minimum response time (secs) | 0.480 | 0.210 | 0.780 | 0.340 |
| Average response time (secs) | 12.897 | 2.914 | 2.218 | 4.880 |
| 95pct response time (secs) | 28.932 | 7.190 | 5.590 | 14.132 |
| Average requests / sec | 3.63 | 17.02 | 22.37 | 9.16 |
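The "Average requests / sec" figures are consistent with simply dividing the successful request count by the 300-second test duration. A short sketch of that arithmetic follows; the variable and function names are made up for illustration.

```python
TEST_DURATION_SECS = 300  # the 5 minute test period

# Successful request counts from the Timer Summary tables above.
SUCCESSFUL_REQUESTS = {
    "esdm.co.uk": 1088,
    "esdm.no-ip.co.uk": 5105,
    "breconfans.org.uk": 6710,
    "www.wikipedia.org": 2748,
}


def requests_per_second(count, duration_secs=TEST_DURATION_SECS):
    """Average throughput over the whole test, rounded to 2 decimal places."""
    return round(count / float(duration_secs), 2)


rates = dict(
    (site, requests_per_second(count))
    for site, count in SUCCESSFUL_REQUESTS.items()
)

if __name__ == "__main__":
    for site in sorted(rates):
        print(site, rates[site])
```

Because the number of virtual users was ramping up throughout, this whole-test average understates what each site was handling by the end; the per-interval "rate" column in the Interval Details tables is the better guide to throughput at a given load.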

These identical tests put the web sites under heavy load, particularly after the first minute or so. It is clear that the two mojoPortal sites dramatically out-perform our old esdm.co.uk, with average response times of 2.9 and 2.2 seconds compared with 12.9 seconds over the full test period. The reasons for this are far less clear though. esdm.co.uk and esdm.no-ip.co.uk have about the same amount of content, but this is not really a fair comparison between DotNetNuke and mojoPortal, because esdm.no-ip.co.uk is running on faster servers. Also, we are running the latest version of mojoPortal (2.3.7.5) compared to an ancient version of DNN.

Interestingly, the minimum response time was 0.48 seconds for esdm.co.uk, significantly faster than the 0.78 seconds for breconfans.org.uk, perhaps because the latter is on US hosting, meaning a longer round-trip regardless of other factors. esdm.no-ip.co.uk gave a 0.21 second minimum, perhaps demonstrating the inherent responsiveness of our new hosting stack.

I was surprised that breconfans.org.uk came out better under load than esdm.no-ip.co.uk, but I suspect this is because the latter is a much larger site, with hundreds of pages (and so a much larger mojoPortal database) compared to about 25 pages for breconfans.org.uk.

Testing Wikipedia was an afterthought, and of course it is not quite the same because it is always under heavy load; nonetheless it was reassuring to find that my mojoPortal sites performed better, and that the Wikipedia performance also degraded through the test as more virtual users were added (though this does make me wonder whether some of the performance degradation is client-side, e.g. an inherent bottleneck in processing HTTP requests in Windows?).

Conclusion

Multi-Mechanize was simple to set up and use (admittedly in a limited manner). It quickly demonstrates what kinds of load web sites can sustain before degrading.

More advanced configuration of the test scripts, e.g. to perform form-based operations, should be reasonably easy for our developers. This should give real power for load-testing our web applications.

Aside: I found when running the longer tests with lots of threads (e.g. 100) that the routine would not complete after the allotted time, and would stick saying “waiting for all requests to finish”. Usually it would complete after a minute or two extra waiting. If not, no outputs were produced, but killing the python.exe process sometimes caused the output files to be created. This issue has been encountered by others, with no apparent resolution (e.g. http://groups.google.com/group/multi-mechanize/browse_thread/thread/706c30203c568d61)